The Deep Residual Shrinkage Network is an improved variant of the Deep Residual Network. In essence, it integrates the Deep Residual Network, attention mechanisms, and soft thresholding functions.

To some extent, the working principle of the Deep Residual Shrinkage Network can be understood as follows: it uses attention mechanisms to identify unimportant features and sets them to zero through soft thresholding; conversely, it identifies important features and retains them. This process enhances the ability of deep neural networks to extract useful features from noise-laden signals.

1. Research Motivation

First, when classifying samples, the presence of noise, such as Gaussian noise, pink noise, and Laplacian noise, is inevitable. More broadly, samples often contain information that is irrelevant to the current classification task, and this information can also be regarded as noise. Such noise can degrade classification performance. (Soft thresholding is a key step in many signal denoising algorithms.)

For example, when people talk by the roadside, the recorded audio may be mixed with car horns and the sound of wheels. When speech recognition is performed on such signals, these background sounds will inevitably affect the results. From a deep learning perspective, the features corresponding to the horns and wheels should be eliminated inside the deep neural network so that they do not affect the speech recognition results.

Second, even within the same dataset, the amount of noise often varies from sample to sample. (This is similar to the situation that attention mechanisms address: in an image dataset, the location of the target object may differ from image to image, and attention mechanisms can focus on the specific location of the target object in each image.)

For example, when training a cat-and-dog classifier, consider five images labeled "dog". The first image may contain a dog and a mouse, the second a dog and a goose, the third a dog and a chicken, the fourth a dog and a donkey, and the fifth a dog and a duck. During training, the classifier may be distracted by the irrelevant objects (the mouse, goose, chicken, donkey, and duck), which lowers classification accuracy. If we can identify these irrelevant objects and eliminate their corresponding features, the accuracy of the cat-and-dog classifier can be improved.

2. Soft Thresholding

Soft thresholding is a core step in many signal denoising algorithms. It eliminates features whose absolute values are below a certain threshold and shrinks features whose absolute values are above this threshold toward zero. It can be implemented with the following formula:

\[y = \begin{cases} x - \tau & x > \tau \\ 0 & -\tau \le x \le \tau \\ x + \tau & x < -\tau \end{cases}\]

The derivative of the soft thresholding output with respect to the input is:

\[\frac{\partial y}{\partial x} = \begin{cases} 1 & x > \tau \\ 0 & -\tau \le x \le \tau \\ 1 & x < -\tau \end{cases}\]

As shown above, the derivative of soft thresholding is either 1 or 0, a property it shares with the ReLU activation function. Therefore, soft thresholding can also reduce the risk of vanishing and exploding gradients in deep learning algorithms.
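The two piecewise formulas above can be transcribed directly. The following is a minimal sketch in plain Python; the function names `soft_threshold` and `soft_threshold_grad` are illustrative choices, not names taken from the original paper.

```python
def soft_threshold(x: float, tau: float) -> float:
    """Soft thresholding y(x) for a single value, following the piecewise formula."""
    if x > tau:
        return x - tau
    if x < -tau:
        return x + tau
    return 0.0  # -tau <= x <= tau is mapped to zero


def soft_threshold_grad(x: float, tau: float) -> float:
    """dy/dx: 1 outside [-tau, tau], 0 inside (the boundary is assigned 0, as in the formula)."""
    return 1.0 if abs(x) > tau else 0.0


tau = 1.0
xs = [-3.0, -1.0, -0.5, 0.0, 0.5, 1.0, 2.5]
print([soft_threshold(x, tau) for x in xs])       # [-2.0, 0.0, 0.0, 0.0, 0.0, 0.0, 1.5]
print([soft_threshold_grad(x, tau) for x in xs])  # [1.0, 0.0, 0.0, 0.0, 0.0, 0.0, 1.0]
```

As with ReLU, the gradient that flows back through this operation is never scaled up or down; it is either passed through unchanged or blocked.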

When setting the threshold of the soft thresholding function, two conditions must be satisfied: first, the threshold must be a positive number; second, the threshold must not exceed the maximum absolute value of the input signal, otherwise the output will be entirely zero.
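The two conditions can be checked mechanically. The sketch below assumes a hypothetical helper, `threshold_is_usable`, which is not part of the original method. Because values with |x| equal to τ are also mapped to zero, the check uses a strict inequality against the maximum absolute value.

```python
from typing import List


def soft_threshold(values: List[float], tau: float) -> List[float]:
    """Element-wise soft thresholding of a list of values."""
    out = []
    for x in values:
        if x > tau:
            out.append(x - tau)
        elif x < -tau:
            out.append(x + tau)
        else:
            out.append(0.0)
    return out


def threshold_is_usable(values: List[float], tau: float) -> bool:
    """Hypothetical check: tau > 0 and tau < max |x|, so at least one output stays non-zero."""
    return tau > 0 and tau < max(abs(v) for v in values)


signal = [0.2, -1.5, 0.8, 3.0, -0.1]
print(threshold_is_usable(signal, 0.5), soft_threshold(signal, 0.5))
print(threshold_is_usable(signal, 3.5), soft_threshold(signal, 3.5))  # every output is zero
```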

In addition, it is preferable for the threshold to satisfy a third condition: each sample should have its own independent threshold that reflects its own noise content.

This is because noise content often varies between samples. For example, within the same dataset it is common for Sample A to contain little noise and Sample B to contain a lot. In that case, when soft thresholding is used in a denoising algorithm, Sample A should use a smaller threshold and Sample B a larger one. Although features and thresholds lose their explicit physical meaning inside deep neural networks, the underlying logic remains the same: each sample should have its own independent threshold, determined by its specific noise content.
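To illustrate why a per-sample threshold matters, the following sketch uses a classical (non-learned) technique: the Donoho-Johnstone universal threshold σ√(2 ln n), where the noise level σ is estimated robustly as median(|x|) / 0.6745 under a Gaussian-noise assumption. This estimator is a substitution made for illustration only. The Deep Residual Shrinkage Network learns its thresholds instead, and the synthetic signals here are hypothetical.

```python
import math
import random
import statistics
from typing import List


def estimate_noise_sigma(values: List[float]) -> float:
    """Robust noise estimate: median absolute value / 0.6745 (assumes Gaussian noise)."""
    return statistics.median(abs(v) for v in values) / 0.6745


def per_sample_threshold(values: List[float]) -> float:
    """Universal threshold sigma * sqrt(2 ln n), computed independently for each sample."""
    return estimate_noise_sigma(values) * math.sqrt(2.0 * math.log(len(values)))


def make_sample(noise_std: float, rng: random.Random, n: int = 256) -> List[float]:
    """Hypothetical sparse 'useful' signal (a few spikes) plus Gaussian noise."""
    clean = [0.0] * n
    for i in (20, 90, 160, 230):
        clean[i] = 8.0
    return [c + rng.gauss(0.0, noise_std) for c in clean]


rng = random.Random(0)
sample_a = make_sample(0.1, rng)  # Sample A: little noise
sample_b = make_sample(1.0, rng)  # Sample B: much more noise
tau_a = per_sample_threshold(sample_a)
tau_b = per_sample_threshold(sample_b)
print(f"tau_A = {tau_a:.3f}, tau_B = {tau_b:.3f}")  # Sample B receives a larger threshold
```

A single threshold shared by both samples would either leave most of the noise in Sample B or shrink Sample A's useful spikes more than necessary.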

3. Attention Mechanism

Attention mechanisms are relatively easy to understand in the context of computer vision. An animal's visual system can rapidly scan an entire scene to locate targets, then focus attention on the target object to extract details while suppressing irrelevant information. For details, please refer to the literature on attention mechanisms.

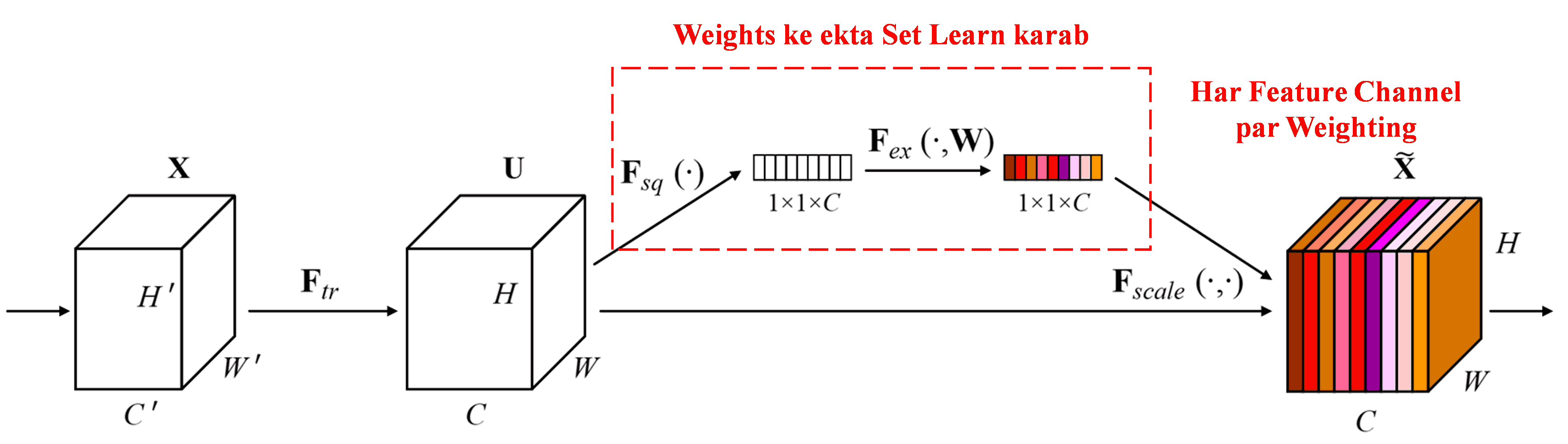

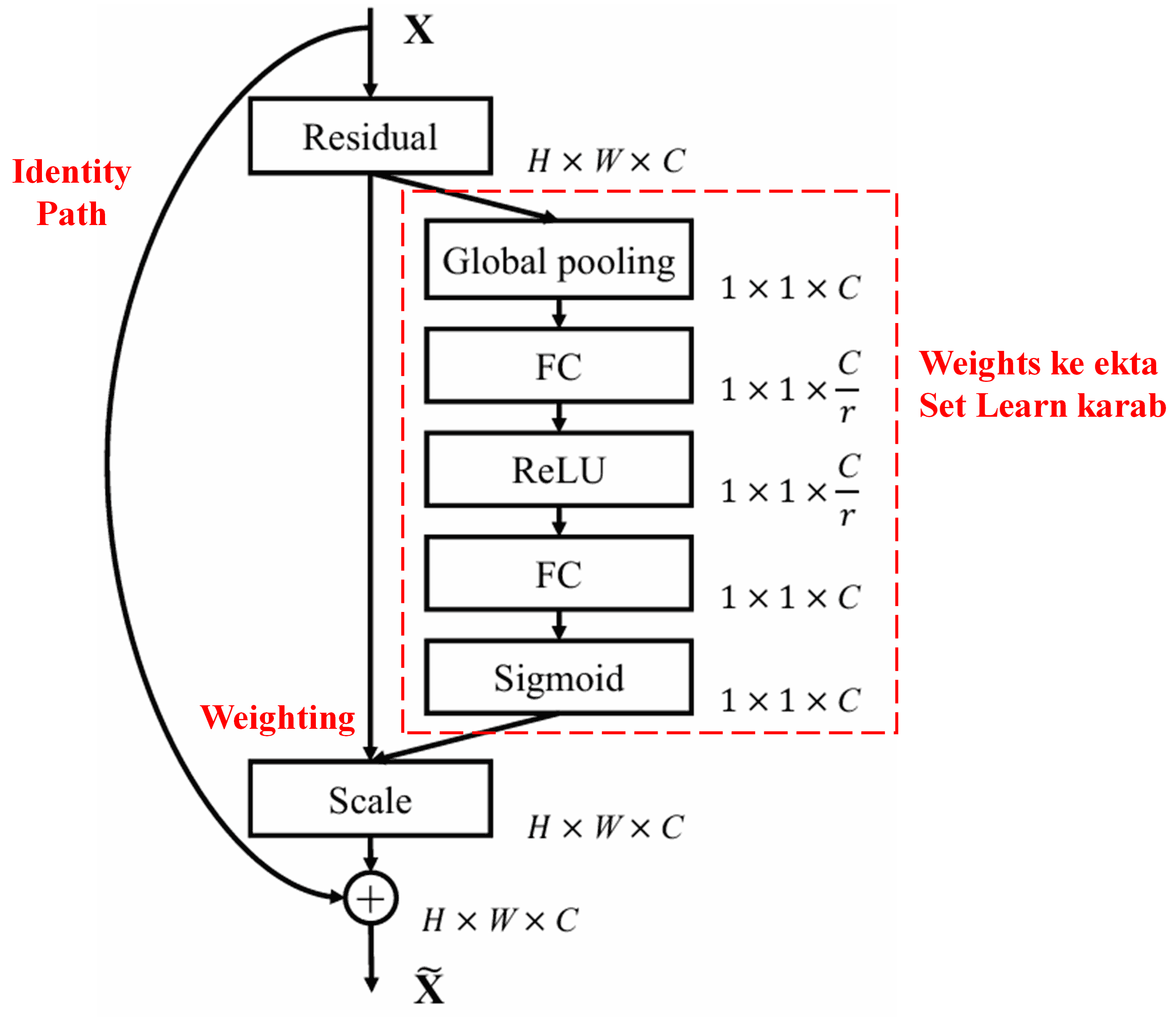

The Squeeze-and-Excitation Network (SENet) is a relatively recent deep learning method that uses attention mechanisms. Across different samples, the contribution of each feature channel to the classification task often varies. SENet employs a small sub-network to obtain a set of weights and then multiplies each weight with the features of the corresponding channel, adjusting the magnitude of the features in that channel. This process can be viewed as applying different levels of attention to different feature channels.

In this approach, every sample has its own independent set of weights; in other words, any two samples will generally receive different weights. In SENet, the weights are obtained along the path "Global Pooling → Fully Connected Layer → ReLU Function → Fully Connected Layer → Sigmoid Function."
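The weighting path can be sketched in plain Python as follows. This is a minimal illustration under several assumptions: 1-D feature maps stored as `[channels][length]`, a hypothetical bottleneck width `reduced`, and random parameters standing in for learned ones. It is not the reference SENet implementation, which operates on 2-D image feature maps.

```python
import math
import random
from typing import List

Matrix = List[List[float]]  # feature map as [channels][length]; FC weights as [out][in]


def linear(x: List[float], w: Matrix, b: List[float]) -> List[float]:
    """Fully connected layer: y_j = sum_i w[j][i] * x[i] + b[j]."""
    return [sum(wji * xi for wji, xi in zip(row, x)) + bj for row, bj in zip(w, b)]


def se_channel_weights(feature_map: Matrix, w1: Matrix, b1: List[float],
                       w2: Matrix, b2: List[float]) -> List[float]:
    """Global Pooling -> FC -> ReLU -> FC -> Sigmoid: one weight in (0, 1) per channel."""
    pooled = [sum(ch) / len(ch) for ch in feature_map]                   # global average pooling
    hidden = [max(0.0, z) for z in linear(pooled, w1, b1)]               # FC + ReLU
    return [1.0 / (1.0 + math.exp(-z)) for z in linear(hidden, w2, b2)]  # FC + Sigmoid


def se_rescale(feature_map: Matrix, weights: List[float]) -> Matrix:
    """Multiply every feature of channel c by that channel's weight."""
    return [[wc * v for v in ch] for ch, wc in zip(feature_map, weights)]


rng = random.Random(42)
channels, reduced, length = 4, 2, 6
# Hypothetical random parameters; in a trained SENet these are learned.
w1 = [[rng.uniform(-1.0, 1.0) for _ in range(channels)] for _ in range(reduced)]
b1 = [0.1] * reduced
w2 = [[rng.uniform(-1.0, 1.0) for _ in range(reduced)] for _ in range(channels)]
b2 = [0.0] * channels

sample_1 = [[rng.gauss(0.0, 1.0) for _ in range(length)] for _ in range(channels)]
sample_2 = [[rng.gauss(0.0, 1.0) for _ in range(length)] for _ in range(channels)]
for name, sample in (("sample_1", sample_1), ("sample_2", sample_2)):
    weights = se_channel_weights(sample, w1, b1, w2, b2)  # each sample gets its own weights
    rescaled = se_rescale(sample, weights)
    print(name, [round(wc, 3) for wc in weights], round(rescaled[0][0], 3))
```

Because the weights are computed from each sample's own pooled features, the same sub-network parameters generally produce different channel weights for different samples.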

4. Soft Thresholding with Deep Attention Mechanism

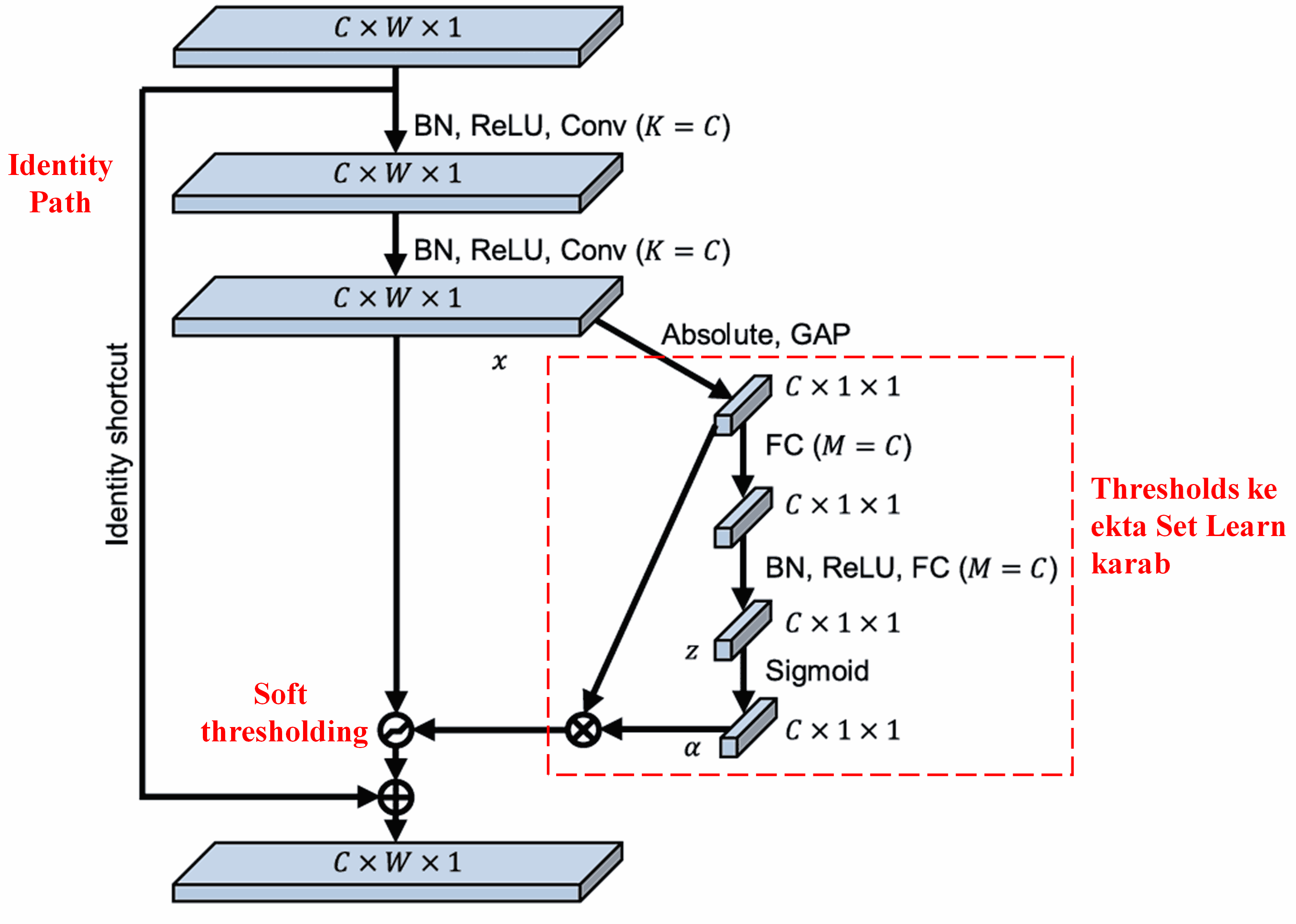

The Deep Residual Shrinkage Network draws on the SENet sub-network structure described above to implement soft thresholding under a deep attention mechanism. Through the sub-network (shown in the red box), a set of thresholds can be learned, so that soft thresholding is applied to each feature channel.

In this sub-network, the absolute values of all features in the input feature map are calculated first. Then, through global average pooling and averaging, a feature denoted A is obtained. In the other path, the feature map after global average pooling is fed into a small fully connected network. This fully connected network uses the Sigmoid function as its final layer, normalizing the output to the range between 0 and 1 and yielding a coefficient denoted α. The final threshold can be expressed as α × A. In other words, the threshold is the product of a number between 0 and 1 and the average of the absolute values of the feature map. This design ensures that the threshold is not only positive but also not excessively large.
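The following is a minimal sketch of the threshold computation τ = α × A in its channel-wise form (one threshold per feature channel). Assumptions: 1-D feature maps stored as `[channels][length]`, the batch normalization inside the fully connected path omitted, biases omitted, and random weights `w1`, `w2` standing in for learned parameters. All names here are hypothetical, not taken from the authors' code.

```python
import math
import random
from typing import List

Matrix = List[List[float]]  # feature map as [channels][length]; FC weights as [out][in]


def linear(x: List[float], w: Matrix) -> List[float]:
    """Fully connected layer without bias (bias omitted for brevity)."""
    return [sum(wji * xi for wji, xi in zip(row, x)) for row in w]


def soft_threshold(v: float, tau: float) -> float:
    """Piecewise soft thresholding from Section 2."""
    if v > tau:
        return v - tau
    if v < -tau:
        return v + tau
    return 0.0


def shrinkage_thresholds(feature_map: Matrix, w1: Matrix, w2: Matrix) -> List[float]:
    """Channel-wise thresholds tau_c = alpha_c * A_c."""
    a = [sum(abs(v) for v in ch) / len(ch) for ch in feature_map]     # |x| then global average pooling -> A
    hidden = [max(0.0, z) for z in linear(a, w1)]                     # FC + ReLU (batch norm omitted)
    alpha = [1.0 / (1.0 + math.exp(-z)) for z in linear(hidden, w2)]  # FC + Sigmoid -> alpha in (0, 1)
    return [al * ac for al, ac in zip(alpha, a)]


def apply_shrinkage(feature_map: Matrix, taus: List[float]) -> Matrix:
    """Soft-threshold every channel with its own threshold."""
    return [[soft_threshold(v, t) for v in ch] for ch, t in zip(feature_map, taus)]


rng = random.Random(1)
channels, length = 3, 8
w1 = [[rng.uniform(-1.0, 1.0) for _ in range(channels)] for _ in range(channels)]  # hypothetical
w2 = [[rng.uniform(-1.0, 1.0) for _ in range(channels)] for _ in range(channels)]  # hypothetical

quiet = [[rng.gauss(0.0, 0.2) for _ in range(length)] for _ in range(channels)]
noisy = [[rng.gauss(0.0, 2.0) for _ in range(length)] for _ in range(channels)]
for name, fmap in (("quiet", quiet), ("noisy", noisy)):
    taus = shrinkage_thresholds(fmap, w1, w2)
    shrunk = apply_shrinkage(fmap, taus)
    zeros = sum(v == 0.0 for ch in shrunk for v in ch)
    print(name, [round(t, 3) for t in taus], "zeroed features:", zeros)
```

Since 0 < α < 1, each threshold is strictly positive and strictly below A, and A never exceeds the largest absolute value in its channel. The threshold therefore satisfies both conditions from Section 2, and it is recomputed from every sample's own features.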

Moreover, different samples yield different thresholds. Consequently, to some extent, this can be understood as a specialized attention mechanism: it identifies features irrelevant to the current task, transforms them into values close to zero through two convolutional layers, and sets them to zero with soft thresholding; alternatively, it identifies features relevant to the current task, transforms them into values far from zero through two convolutional layers, and preserves them.

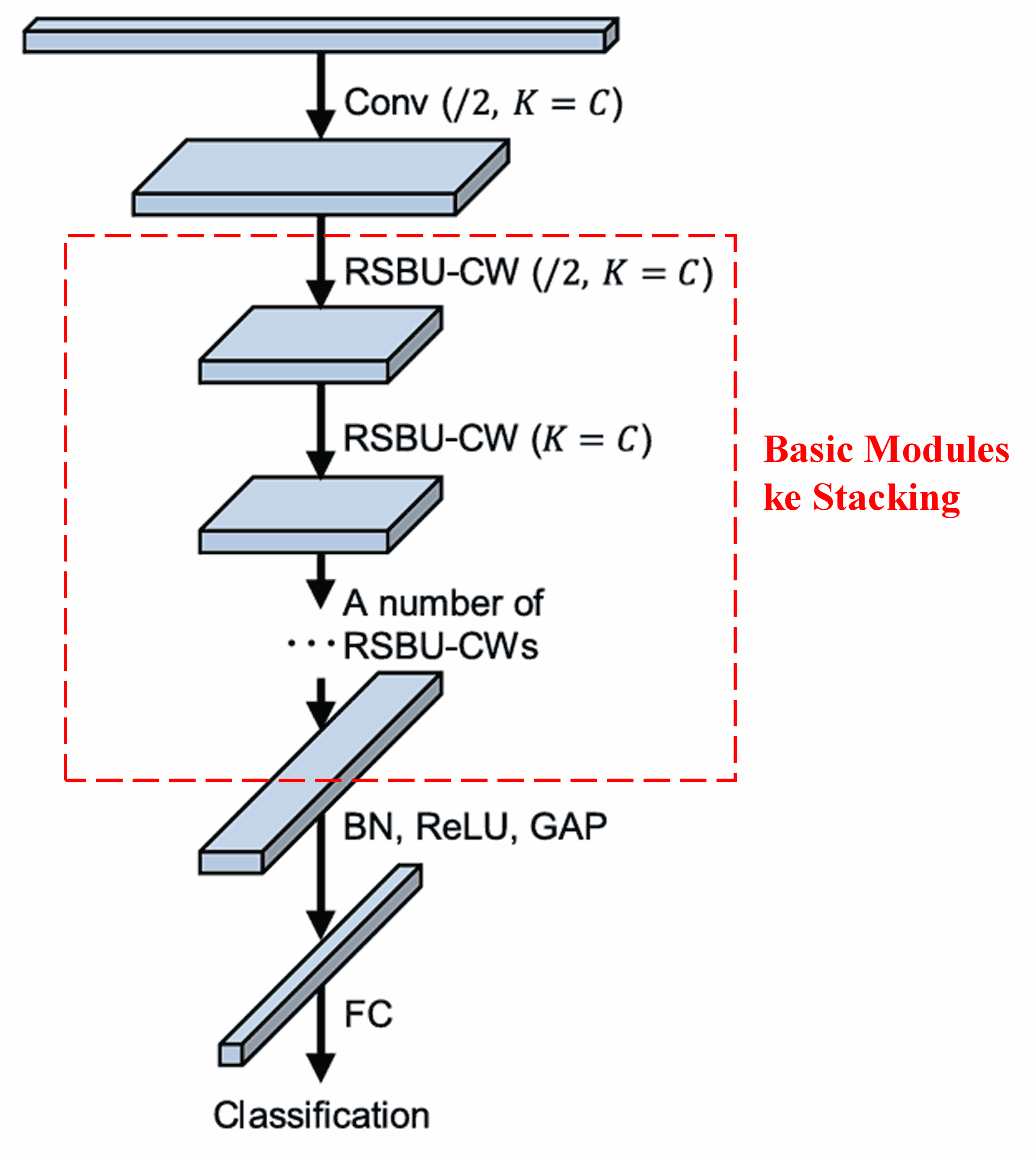

Finally, the complete Deep Residual Shrinkage Network is constructed by stacking a number of these basic modules together with convolutional layers, batch normalization, activation functions, global average pooling, and a fully connected output layer.
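To show how the pieces fit together, here is a heavily simplified forward pass in plain Python. It is a sketch under stated assumptions, not the authors' implementation. Batch normalization and all biases are omitted; the input is a 1-D signal; the convolution is a "same"-padded, stride-1 cross-correlation; the layer sizes (`C`, `K`, `L`, `N_CLASSES`, `N_UNITS`) are hypothetical; and random weights stand in for trained ones. Each residual shrinkage unit applies ReLU → Conv → ReLU → Conv, then the channel-wise thresholds τ = α × A, then soft thresholding, and finally adds the identity shortcut.

```python
import math
import random
from typing import Dict, List

Matrix = List[List[float]]  # [channels][length] for feature maps, [out][in] for FC weights


def conv1d(x: Matrix, kernels: List[Matrix]) -> Matrix:
    """'Same'-padded 1-D convolution (cross-correlation): kernels[out_c][in_c][k], stride 1."""
    length, k = len(x[0]), len(kernels[0][0])
    pad = k // 2
    out = []
    for kern in kernels:
        row = []
        for t in range(length):
            s = 0.0
            for ch, w in zip(x, kern):
                for j in range(k):
                    idx = t + j - pad
                    if 0 <= idx < length:
                        s += w[j] * ch[idx]
            row.append(s)
        out.append(row)
    return out


def relu(x: Matrix) -> Matrix:
    return [[max(0.0, v) for v in ch] for ch in x]


def linear(v: List[float], w: Matrix) -> List[float]:
    """Fully connected layer without bias."""
    return [sum(a * b for a, b in zip(row, v)) for row in w]


def soft_threshold(v: float, tau: float) -> float:
    return v - tau if v > tau else (v + tau if v < -tau else 0.0)


def channel_thresholds(h: Matrix, fc1: Matrix, fc2: Matrix) -> List[float]:
    """tau_c = alpha_c * A_c: A from |h| + global average pooling, alpha from FC-ReLU-FC-Sigmoid."""
    a = [sum(abs(v) for v in ch) / len(ch) for ch in h]
    hidden = [max(0.0, z) for z in linear(a, fc1)]
    alpha = [1.0 / (1.0 + math.exp(-z)) for z in linear(hidden, fc2)]
    return [al * ac for al, ac in zip(alpha, a)]


def rsbu(x: Matrix, p: Dict[str, list]) -> Matrix:
    """Simplified residual shrinkage building unit (batch normalization omitted)."""
    h = conv1d(relu(x), p["conv1"])
    h = conv1d(relu(h), p["conv2"])
    taus = channel_thresholds(h, p["fc1"], p["fc2"])
    h = [[soft_threshold(v, t) for v in ch] for ch, t in zip(h, taus)]
    return [[xv + hv for xv, hv in zip(xc, hc)] for xc, hc in zip(x, h)]  # identity shortcut


def drsn_forward(x: Matrix, stem: List[Matrix], units: List[Dict[str, list]], fc_out: Matrix) -> List[float]:
    """Conv stem -> stacked units -> ReLU -> global average pooling -> FC output (logits)."""
    h = conv1d(x, stem)
    for p in units:
        h = rsbu(h, p)
    pooled = [sum(ch) / len(ch) for ch in relu(h)]
    return linear(pooled, fc_out)


def rand_matrix(rng: random.Random, rows: int, cols: int, scale: float = 0.3) -> Matrix:
    return [[rng.uniform(-scale, scale) for _ in range(cols)] for _ in range(rows)]


rng = random.Random(0)
C, K, L, N_CLASSES, N_UNITS = 4, 3, 16, 3, 2  # hypothetical sizes
stem = [rand_matrix(rng, 1, K) for _ in range(C)]  # 1 input channel -> C channels
units = [{"conv1": [rand_matrix(rng, C, K) for _ in range(C)],
          "conv2": [rand_matrix(rng, C, K) for _ in range(C)],
          "fc1": rand_matrix(rng, C, C), "fc2": rand_matrix(rng, C, C)} for _ in range(N_UNITS)]
fc_out = rand_matrix(rng, N_CLASSES, C)
signal = [[math.sin(0.5 * t) + rng.gauss(0.0, 0.3) for t in range(L)]]  # one noisy input channel
print(drsn_forward(signal, stem, units, fc_out))
```

The identity shortcut means that when a unit's shrinkage branch outputs all zeros, the unit simply passes its input through unchanged. This is how stacking many units remains trainable.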

5. Generalization Capability

The Deep Residual Shrinkage Network is, in fact, a general feature learning method. This is because, in many feature learning tasks, samples contain some amount of noise and irrelevant information, which can degrade feature learning performance. For example:

In image classification, if an image contains many other objects at the same time, these objects can be regarded as "noise." The Deep Residual Shrinkage Network can use the attention mechanism to notice this "noise" and then employ soft thresholding to set the corresponding features to zero, which may improve image classification accuracy.

In speech recognition, especially in relatively noisy environments such as roadside conversations or factory workshops, the Deep Residual Shrinkage Network may improve recognition accuracy, or at least offers a methodology capable of improving it.

Reference

Minghang Zhao, Shisheng Zhong, Xuyun Fu, Baoping Tang, Michael Pecht, Deep residual shrinkage networks for fault diagnosis, IEEE Transactions on Industrial Informatics, 2020, 16(7): 4681-4690.

https://ieeexplore.ieee.org/document/8850096

BibTeX

@article{Zhao2020,

author = {Minghang Zhao and Shisheng Zhong and Xuyun Fu and Baoping Tang and Michael Pecht},

title = {Deep Residual Shrinkage Networks for Fault Diagnosis},

journal = {IEEE Transactions on Industrial Informatics},

year = {2020},

volume = {16},

number = {7},

pages = {4681-4690},

doi = {10.1109/TII.2019.2943898}

}

Academic Impact

This paper has received more than 1,400 citations on Google Scholar.

Based on incomplete statistics, the Deep Residual Shrinkage Network (DRSN) has been applied, either directly or with modifications, in more than 1,000 publications and studies across many fields, including mechanical engineering, electrical power, vision, healthcare, speech, text, radar, and remote sensing.