The Deep Residual Shrinkage Network is an improved variant of the Deep Residual Network. In essence, it integrates the Deep Residual Network, attention mechanisms, and soft thresholding functions.

Conceptually, the working principle of the Deep Residual Shrinkage Network can be understood as follows. It uses attention mechanisms to identify unimportant features and then uses soft thresholding functions to set them to zero. Conversely, it identifies important features and retains them. This process strengthens the ability of a deep neural network to extract useful features from noisy signals.

1. Research Motivation

First, when classifying samples, some noise is almost unavoidable, for example Gaussian noise, pink noise, and Laplacian noise. More broadly, samples often contain information that is irrelevant to the current classification task, and this information can also be regarded as noise. Such noise can degrade classification performance. (Soft thresholding is a key step in many signal denoising algorithms.)

For example, when people talk at the roadside, their speech may be mixed with sounds such as car horns and wheel noise. When speech recognition is performed on such signals, these background sounds will affect the results. From a deep learning perspective, the features corresponding to the horns and wheels should be removed inside the deep neural network so that they do not affect the speech recognition results.

Second, even within the same dataset, the amount of noise often varies from sample to sample. (This is similar to attention mechanisms. Take an image dataset as an example: the location of the target object may differ from image to image, and attention mechanisms can help locate the target object in each image.)

For example, suppose we are training a cat-and-dog classifier and have 5 images labeled "dog". The first image might contain a dog and a mouse, the second a dog and a goose, the third a dog and a chicken, the fourth a dog and a donkey, and the fifth a dog and a duck. During training, irrelevant objects such as the mouse, goose, chicken, donkey, and duck will interfere with the classifier, and classification accuracy will drop. If we could identify these irrelevant objects and remove their features, the accuracy of the cat-and-dog classifier could improve.

2. Soft Thresholding

Soft thresholding is a key step in many signal denoising algorithms. It removes features whose absolute values are below a threshold. It also shrinks features whose absolute values exceed the threshold toward zero. It can be expressed by the following formula:

\[y = \begin{cases} x - \tau & x > \tau \\ 0 & -\tau \le x \le \tau \\ x + \tau & x < -\tau \end{cases}\]

The derivative of the soft thresholding output with respect to the input is:

\[\frac{\partial y}{\partial x} = \begin{cases} 1 & x > \tau \\ 0 & -\tau \le x \le \tau \\ 1 & x < -\tau \end{cases}\]

As shown above, the derivative of soft thresholding is either 1 or 0. This property is similar to that of the ReLU activation function. Therefore, soft thresholding can also reduce the risk of gradient vanishing and gradient exploding in deep learning algorithms.
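The piecewise formula for y and its derivative map directly onto two small functions. The sketch below is a minimal illustration in plain Python on scalar values. It is not taken from the paper, and the function names `soft_threshold` and `soft_threshold_grad` are hypothetical.

```python
def soft_threshold(x: float, tau: float) -> float:
    """Soft thresholding: 0 inside [-tau, tau], shrink toward zero outside."""
    if x > tau:
        return x - tau
    if x < -tau:
        return x + tau
    return 0.0


def soft_threshold_grad(x: float, tau: float) -> float:
    """Derivative dy/dx: 1 outside [-tau, tau], 0 inside (including the endpoints)."""
    return 1.0 if abs(x) > tau else 0.0


signal = [-2.0, -0.5, 0.0, 0.3, 1.5]
tau = 1.0
print([soft_threshold(v, tau) for v in signal])       # [-1.0, 0.0, 0.0, 0.0, 0.5]
print([soft_threshold_grad(v, tau) for v in signal])  # [1.0, 0.0, 0.0, 0.0, 1.0]
```

The gradient is 0 or 1 everywhere, which is the ReLU-like property described above.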

In the soft thresholding function, the threshold must satisfy two conditions. First, the threshold must be a positive number. Second, the threshold must be smaller than the maximum absolute value of the input signal; otherwise, the output will be entirely zero.
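The two conditions can be checked numerically. This sketch uses a short hypothetical signal and a helper `threshold_is_valid` that exists only for illustration. It shows that a threshold at or above the largest absolute input value zeroes the whole output.

```python
def soft_threshold(x, tau):
    """Piecewise soft thresholding, as in the formula for y above."""
    if x > tau:
        return x - tau
    if x < -tau:
        return x + tau
    return 0.0


def threshold_is_valid(signal, tau):
    """Check both conditions: tau > 0 and tau below max |x|.

    The comparison is strict because at tau == max|x| every input
    already falls inside [-tau, tau], so the output is all zeros.
    """
    return 0.0 < tau < max(abs(v) for v in signal)


signal = [0.5, -2.0, 1.0]
for tau in (0.75, 2.0, 2.5):
    out = [soft_threshold(v, tau) for v in signal]
    print(tau, threshold_is_valid(signal, tau), out)
# 0.75 True [0.0, -1.25, 0.25]
# 2.0 False [0.0, 0.0, 0.0]
# 2.5 False [0.0, 0.0, 0.0]
```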

In addition, it is preferable for the threshold to satisfy a third condition: each sample should have its own independent threshold, set according to its noise content.

The reason is that noise content often varies among samples. For example, within the same dataset it is common for Sample A to contain little noise while Sample B contains a lot. In this case, when soft thresholding is applied in a denoising algorithm, Sample A should use a smaller threshold and Sample B a larger one. In deep neural networks, these features and thresholds lose their explicit physical meaning, but the basic logic remains the same. In other words, each sample should have its own independent threshold, determined by its specific noise content.
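The sketch below illustrates why each sample benefits from its own threshold. It borrows a classical technique that is not part of the Deep Residual Shrinkage Network: the Donoho-Johnstone universal threshold τ = σ√(2 ln n), which grows with the noise level σ. The sketch makes three assumptions:

- σ is known for each sample.
- The samples are hypothetical, built from a few large spikes plus Gaussian noise.
- The helper names are illustrative only.

In a Deep Residual Shrinkage Network, the threshold is learned rather than computed from σ.

```python
import math
import random


def soft_threshold(x, tau):
    """Piecewise soft thresholding, as in the formula for y above."""
    if x > tau:
        return x - tau
    if x < -tau:
        return x + tau
    return 0.0


def universal_threshold(sigma, n):
    """Donoho-Johnstone universal threshold sigma * sqrt(2 ln n).

    Assumption: the per-sample noise level sigma is known here.
    """
    return sigma * math.sqrt(2.0 * math.log(n))


def make_sample(sigma, n, rng):
    """Hypothetical sample: three large 'useful' spikes plus Gaussian noise."""
    x = [rng.gauss(0.0, sigma) for _ in range(n)]
    for i in (10, 50, 90):
        x[i] += 5.0
    return x


rng = random.Random(0)
n = 128
thresholds = {}
for name, sigma in [("Sample A", 0.1), ("Sample B", 1.0)]:
    sample = make_sample(sigma, n, rng)
    tau = universal_threshold(sigma, n)  # more noise -> larger threshold
    thresholds[name] = tau
    denoised = [soft_threshold(v, tau) for v in sample]
    kept = sum(1 for v in denoised if v != 0.0)
    print(f"{name}: sigma={sigma}, tau={tau:.3f}, nonzero outputs={kept}")
```

A single shared threshold would be too large for Sample A or too small for Sample B. The per-sample threshold scales with each sample's own noise level.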

3. Attention Mechanism

Attention mechanisms are easy to understand in the field of computer vision. An animal's visual system can locate targets by quickly scanning the entire scene. It then focuses attention on the target object to extract more detail while suppressing irrelevant information. For specifics, please refer to the literature on attention mechanisms.

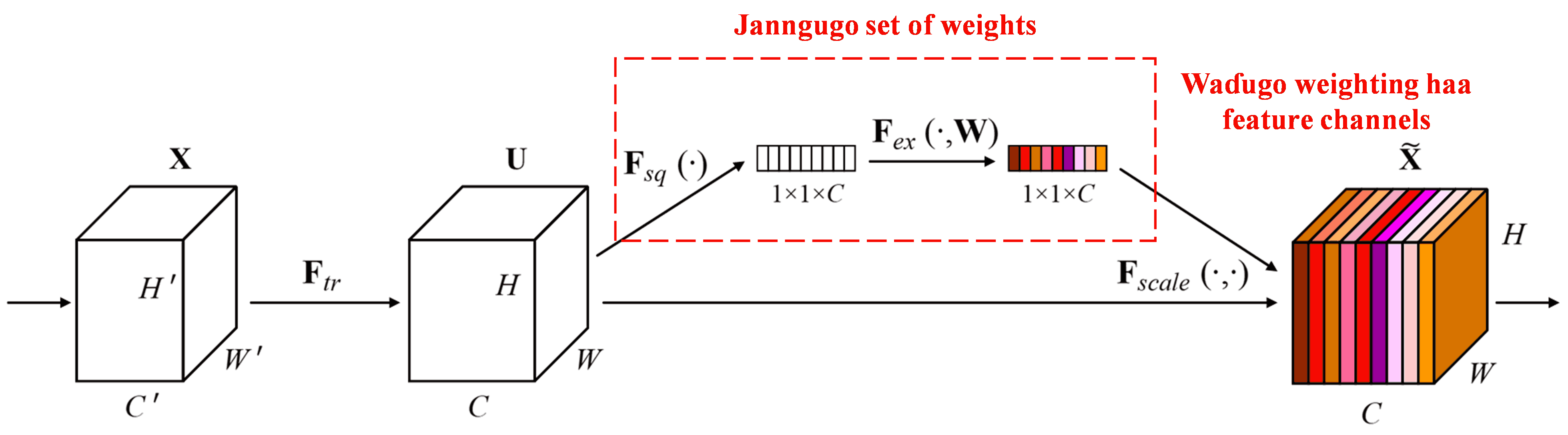

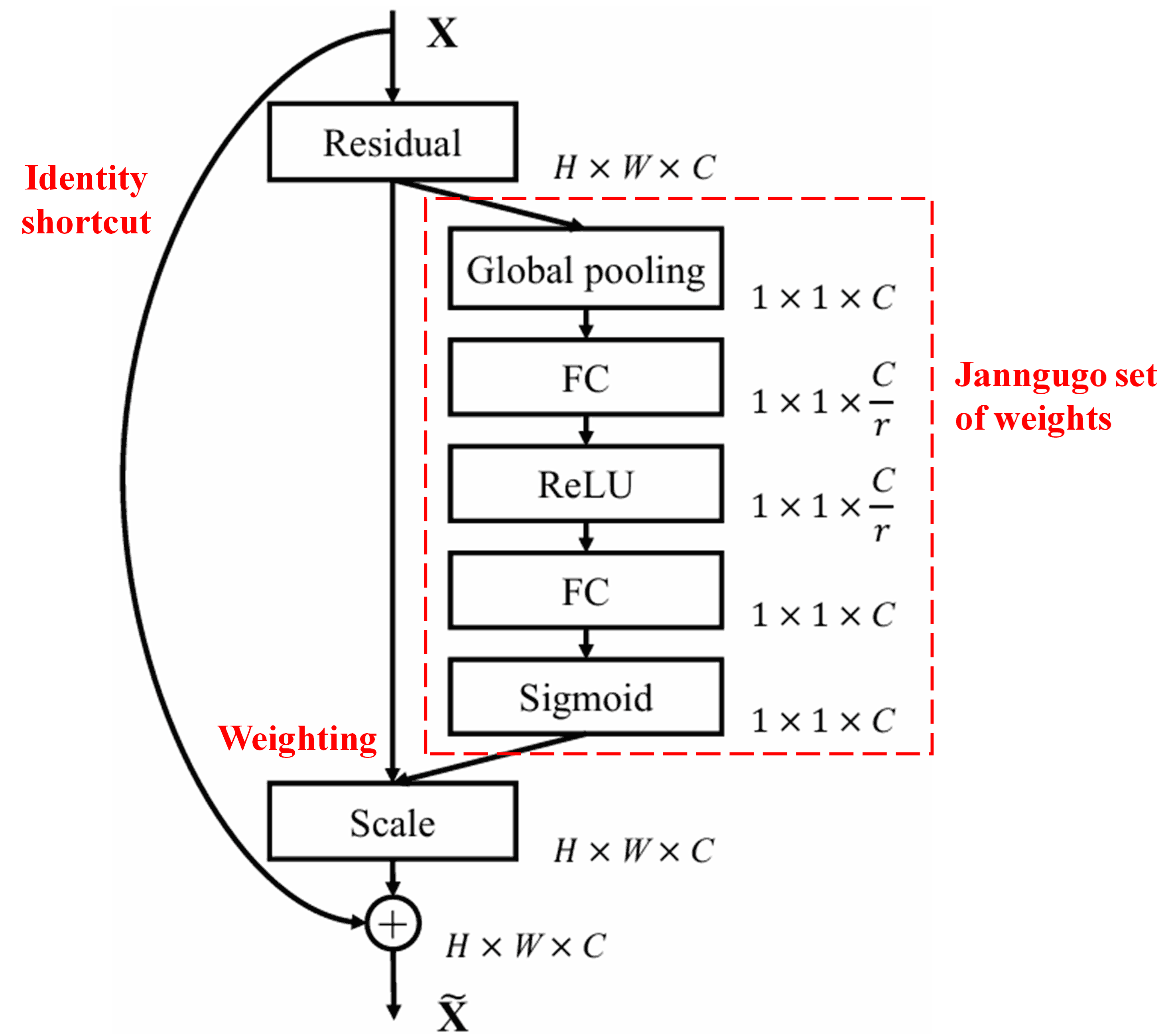

The Squeeze-and-Excitation Network (SENet) is a relatively new deep learning method that uses attention mechanisms. Across samples, the contributions of different feature channels to the classification task often differ. SENet uses a small sub-network to obtain a set of weights. It then multiplies these weights with the features of the corresponding channels to adjust the magnitude of the features in each channel. This process can be viewed as applying different levels of attention to different feature channels.

In this approach, each sample has its own set of weights. In other words, the weights of any two samples can differ. In SENet, the specific path for obtaining the weights is "Global Pooling → Fully Connected Layer → ReLU Function → Fully Connected Layer → Sigmoid Function."
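The SENet weight path described above can be sketched in plain Python. This is a minimal illustration, not the reference SENet implementation, and it rests on three assumptions:

- The feature map is a list of channels, each a list of values.
- The fully connected weights are random placeholders.
- The reduction from 4 channels to 2 hidden units is an assumed setting.

```python
import math
import random


def relu(v):
    return [max(0.0, a) for a in v]


def sigmoid(v):
    return [1.0 / (1.0 + math.exp(-a)) for a in v]


def dense(v, weights, bias):
    """Fully connected layer; `weights` has one row per output unit."""
    return [sum(w * a for w, a in zip(row, v)) + b for row, b in zip(weights, bias)]


def se_weights(feature_map, w1, b1, w2, b2):
    """Global Pooling -> FC -> ReLU -> FC -> Sigmoid: one weight per channel."""
    pooled = [sum(ch) / len(ch) for ch in feature_map]  # global average pooling
    return sigmoid(dense(relu(dense(pooled, w1, b1)), w2, b2))


def se_rescale(feature_map, weights):
    """Multiply every feature in channel c by the sample's weight for channel c."""
    return [[s * a for a in ch] for s, ch in zip(weights, feature_map)]


rng = random.Random(42)
C, H = 4, 2  # hypothetical: 4 channels reduced to 2 hidden units
w1 = [[rng.uniform(-1.0, 1.0) for _ in range(C)] for _ in range(H)]
w2 = [[rng.uniform(-1.0, 1.0) for _ in range(H)] for _ in range(C)]
b1, b2 = [0.0] * H, [0.0] * C

for name in ("sample 1", "sample 2"):
    fmap = [[rng.gauss(0.0, 1.0) for _ in range(8)] for _ in range(C)]
    weights = se_weights(fmap, w1, b1, w2, b2)  # computed per sample
    scaled = se_rescale(fmap, weights)
    print(name, [round(s, 3) for s in weights])
```

The sub-network recomputes the weights from each sample's own feature map. This is why each sample ends up with its own set of channel weights.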

4. Soft Thresholding with Deep Attention Mechanism

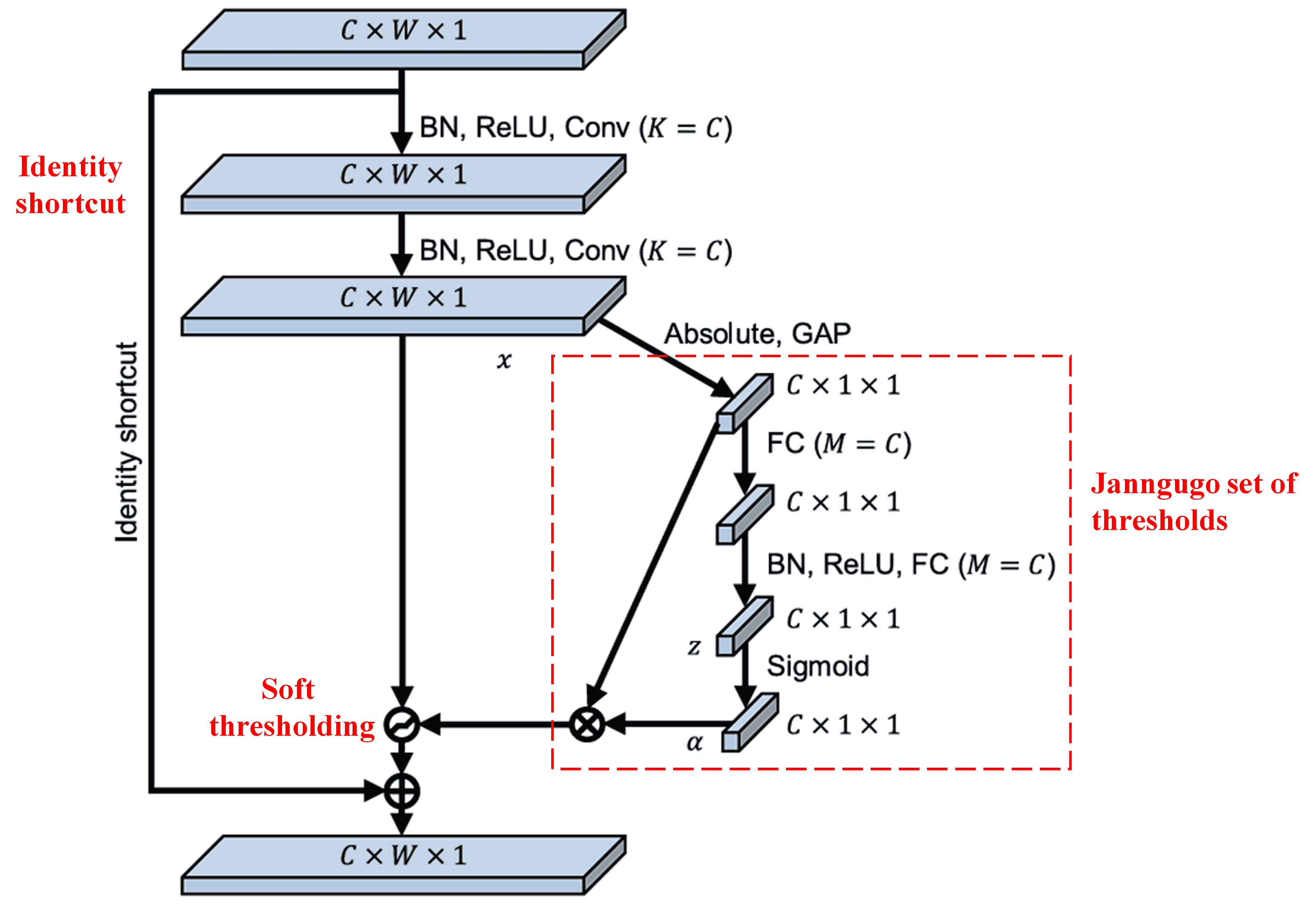

The Deep Residual Shrinkage Network draws on the SENet sub-network structure described above to implement soft thresholding under a deep attention mechanism. Through this sub-network (shown in the red box), the network learns a set of thresholds and uses them to apply soft thresholding to each feature channel.

The sub-network computes the threshold in the following steps:

1. It first computes the absolute values of all features in the input feature map.
2. It then applies global average pooling and averaging to obtain a feature, denoted A.
3. In the other path, it feeds the feature map after global average pooling into a small fully connected network. This network uses the Sigmoid function as its final layer to normalize the output to between 0 and 1, yielding a coefficient denoted α.
4. The final threshold is expressed as α×A.

The threshold is therefore the product of a number between 0 and 1 and the average of the absolute values of the feature map. This design ensures that the threshold is positive but not too large.
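The sketch below implements the threshold sub-network as described above: absolute values, global average pooling, averaging to obtain A, and a small fully connected network ending in a Sigmoid to obtain α. It is a minimal illustration with several assumptions:

- The weights are random placeholders.
- The hidden ReLU layer is borrowed from the SENet path.
- The sketch produces one threshold shared by all channels, matching the description here. A channel-wise variant would instead keep one A and one α per channel.

```python
import math
import random


def relu(v):
    return [max(0.0, a) for a in v]


def sigmoid(a):
    return 1.0 / (1.0 + math.exp(-a))


def dense(v, weights, bias):
    """Fully connected layer; `weights` has one row per output unit."""
    return [sum(w * a for w, a in zip(row, v)) + b for row, b in zip(weights, bias)]


def soft_threshold(a, tau):
    # Zero inside [-tau, tau], shrink toward zero outside.
    return a - tau if a > tau else a + tau if a < -tau else 0.0


def drsn_threshold(feature_map, w1, b1, w2, b2):
    """Threshold tau = alpha * A for one sample."""
    gap = [sum(abs(a) for a in ch) / len(ch) for ch in feature_map]  # |x| -> GAP
    big_a = sum(gap) / len(gap)                                      # averaging -> A
    alpha = sigmoid(dense(relu(dense(gap, w1, b1)), w2, b2)[0])      # alpha in (0, 1)
    return alpha * big_a


rng = random.Random(1)
C, H = 4, 2  # hypothetical sizes
w1 = [[rng.uniform(-1.0, 1.0) for _ in range(C)] for _ in range(H)]
w2 = [[rng.uniform(-1.0, 1.0) for _ in range(H)]]  # one output unit -> one alpha
b1, b2 = [0.0] * H, [0.0]

fmap = [[rng.gauss(0.0, 1.0) for _ in range(16)] for _ in range(C)]
tau = drsn_threshold(fmap, w1, b1, w2, b2)
shrunk = [[soft_threshold(a, tau) for a in ch] for ch in fmap]
max_abs = max(abs(a) for ch in fmap for a in ch)
print(f"tau={tau:.3f}, max|x|={max_abs:.3f}")
```

Since 0 < α < 1 and A is a mean of absolute values, τ is positive and stays below max |x| whenever the features are not all zero. Both threshold conditions from the soft thresholding section therefore hold.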

Moreover, different samples have different thresholds. Conceptually, this can therefore be understood as a specialized attention mechanism. It identifies features irrelevant to the current task, transforms them into values close to zero through two convolutional layers, and then sets them to zero using soft thresholding. Conversely, it identifies features relevant to the current task, transforms them into values far from zero through two convolutional layers, and preserves them.

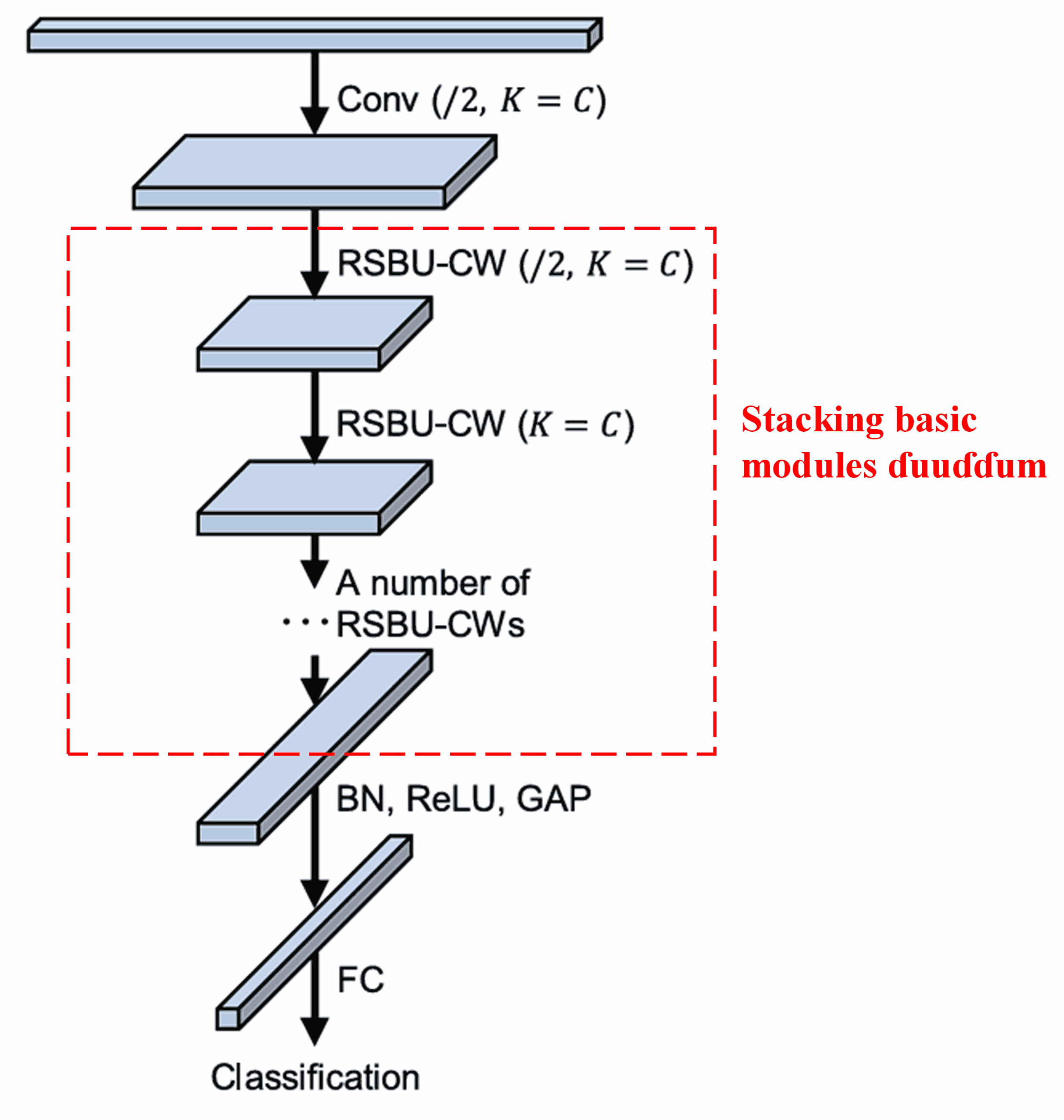

Finally, the complete Deep Residual Shrinkage Network is constructed by stacking a number of basic modules together with convolutional layers, batch normalization, activation functions, global average pooling, and a fully connected output layer.
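The overall structure can be sketched end to end in plain Python. This is a heavily simplified illustration, not the authors' implementation. Each residual shrinkage unit in the sketch does the following:

1. Applies ReLU → convolution → ReLU → convolution.
2. Computes τ = α×A.
3. Applies soft thresholding.
4. Adds the identity shortcut.

The sketch also makes these simplifications, all of which are assumptions:

- Batch normalization is omitted.
- Each convolution acts on one channel at a time (depthwise).
- A single linear layer stands in for the small fully connected network that produces α.
- All parameters are random placeholders.
- The helpers `random_kernels` and `make_unit` are hypothetical.

```python
import math
import random


def relu(v):
    return [max(0.0, a) for a in v]


def sigmoid(a):
    return 1.0 / (1.0 + math.exp(-a))


def soft_threshold(a, tau):
    # Zero inside [-tau, tau], shrink toward zero outside.
    return a - tau if a > tau else a + tau if a < -tau else 0.0


def conv1d_same(ch, kernel):
    """Single-channel 1-D convolution (cross-correlation) with zero 'same' padding."""
    k, n = len(kernel) // 2, len(ch)
    return [sum(kernel[j] * ch[i + j - k] for j in range(len(kernel)) if 0 <= i + j - k < n)
            for i in range(n)]


def random_kernels(channels, rng):
    """Hypothetical random size-3 kernels, one per channel."""
    return [[rng.uniform(-0.5, 0.5) for _ in range(3)] for _ in range(channels)]


def make_unit(channels, rng):
    """Hypothetical random parameters for one residual shrinkage unit."""
    return {"k1": random_kernels(channels, rng), "k2": random_kernels(channels, rng),
            "w_alpha": [rng.uniform(-1.0, 1.0) for _ in range(channels)]}


def residual_shrinkage_unit(x, p):
    """x is a list of channels; returns x + soft_threshold(branch(x), tau)."""
    # Residual branch: ReLU -> conv -> ReLU -> conv (batch normalization omitted).
    h = [conv1d_same(relu(ch), p["k1"][c]) for c, ch in enumerate(x)]
    h = [conv1d_same(relu(ch), p["k2"][c]) for c, ch in enumerate(h)]
    # Threshold sub-network: |h| -> global average pooling -> A and alpha.
    gap = [sum(abs(a) for a in ch) / len(ch) for ch in h]
    big_a = sum(gap) / len(gap)
    alpha = sigmoid(sum(w * g for w, g in zip(p["w_alpha"], gap)))
    tau = alpha * big_a
    # Soft thresholding of the branch, then the identity shortcut.
    return [[xa + soft_threshold(ha, tau) for xa, ha in zip(xc, hc)]
            for xc, hc in zip(x, h)]


def drsn_forward(signal, first_kernels, units, w_out):
    """Input conv -> stacked units -> ReLU -> global average pooling -> FC output."""
    x = [conv1d_same(signal, k) for k in first_kernels]  # 1 input channel -> C channels
    for p in units:
        x = residual_shrinkage_unit(x, p)
    pooled = [sum(relu(ch)) / len(ch) for ch in x]
    return [sum(w * g for w, g in zip(row, pooled)) for row in w_out]


rng = random.Random(0)
channels, n_classes = 4, 3
first_kernels = random_kernels(channels, rng)
units = [make_unit(channels, rng) for _ in range(2)]
w_out = [[rng.uniform(-1.0, 1.0) for _ in range(channels)] for _ in range(n_classes)]
signal = [math.sin(0.3 * i) + rng.gauss(0.0, 0.2) for i in range(32)]
logits = drsn_forward(signal, first_kernels, units, w_out)
print(len(logits))  # 3
```

The thresholded branch is added to the input. As a result, a unit whose residual branch outputs only zeros passes its input through unchanged.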

5. Generalization Capability

The Deep Residual Shrinkage Network is, in essence, a general feature learning method. In many feature learning tasks, samples often contain some noise and irrelevant information, and this can affect feature learning performance. For example:

In image classification, if an image contains many other objects at the same time, these objects can be regarded as "noise." The Deep Residual Shrinkage Network may use the attention mechanism to "notice" this noise. It can then use soft thresholding to set the features corresponding to the noise to zero, which may improve image classification accuracy.

In speech recognition, especially in noisy environments such as roadside conversations or a factory workshop, the Deep Residual Shrinkage Network may improve speech recognition accuracy. At the very least, it offers a methodology that could improve speech recognition accuracy.

Reference

Minghang Zhao, Shisheng Zhong, Xuyun Fu, Baoping Tang, Michael Pecht, Deep residual shrinkage networks for fault diagnosis, IEEE Transactions on Industrial Informatics, 2020, 16(7): 4681-4690.

https://ieeexplore.ieee.org/document/8850096

BibTeX

@article{Zhao2020,
  author = {Minghang Zhao and Shisheng Zhong and Xuyun Fu and Baoping Tang and Michael Pecht},
  title = {Deep Residual Shrinkage Networks for Fault Diagnosis},
  journal = {IEEE Transactions on Industrial Informatics},
  year = {2020},
  volume = {16},
  number = {7},
  pages = {4681-4690},
  doi = {10.1109/TII.2019.2943898}
}

Academic Impact

This paper has received more than 1,400 citations on Google Scholar.

According to incomplete statistics, the Deep Residual Shrinkage Network (DRSN) has been used in more than 1,000 publications and studies, either directly or in modified form. These span many fields, including mechanical engineering, electrical power, vision, healthcare, speech, text, radar, and remote sensing.