The Deep Residual Shrinkage Network (DRSN) is an improved version of the Deep Residual Network (ResNet). In essence, it integrates ResNet, an attention mechanism, and soft thresholding functions.

To a certain extent, the way the Deep Residual Shrinkage Network works can be understood as follows: through the attention mechanism it notices the unimportant features, and through soft thresholding functions it sets them to zero. Put the other way around, it notices the important features through the attention mechanism and preserves them. This strengthens the ability of a deep neural network to extract useful features from noisy signals.

1. Research Motivation

First, when we classify samples, the samples inevitably contain some noise, such as Gaussian noise, pink noise, or Laplacian noise. More broadly, samples often contain information that is unrelated to the current classification task; this can also be regarded as “noise”. Such noise can degrade classification results. (Soft thresholding is a key step in many signal denoising algorithms.)

For example, suppose you are talking at the roadside. Your voice gets mixed with the sounds of car horns and wheels. When speech recognition is applied to these signals, the horn and wheel sounds degrade the recognition result. From a deep learning perspective, the features corresponding to the horns and wheels should be eliminated inside the deep neural network so that speech recognition can work properly.

Second, the amount of noise can vary from sample to sample within the same dataset. (This is similar in spirit to the attention mechanism: in an image dataset, for example, the target object may appear at a different location in each image, and the attention mechanism focuses on the location of the target object in each particular image.)

For example, when we train a cat-dog classifier, suppose there are five images labeled “Dog”. The first image may contain a dog and a mouse, the second a dog and a goose, the third a dog and a chicken, the fourth a dog and a donkey, and the fifth a dog and a duck. When we train the classifier, irrelevant objects such as the mouse, goose, chicken, donkey, and duck will interfere, which can lower classification accuracy. If we could notice these irrelevant objects and eliminate their features, the accuracy of the cat-dog classifier could improve.

2. Soft Thresholding

Soft thresholding is the core step of many signal denoising algorithms. It sets to zero the features whose absolute values are below a certain threshold, and shrinks toward zero the features whose absolute values are above that threshold. It can be implemented with the following formula:

\[y = \begin{cases} x - \tau & x > \tau \\ 0 & -\tau \le x \le \tau \\ x + \tau & x < -\tau \end{cases}\]

The derivative of the soft thresholding output with respect to its input is:

\[\frac{\partial y}{\partial x} = \begin{cases} 1 & x > \tau \\ 0 & -\tau \le x \le \tau \\ 1 & x < -\tau \end{cases}\]

The formula above shows that the derivative of soft thresholding is either 1 or 0. This is the same property that the ReLU activation function has. As a result, soft thresholding also reduces the risk of vanishing and exploding gradients in deep learning algorithms.
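The two formulas above can be sketched in a few lines of NumPy. This is only an illustrative sketch: the function names `soft_threshold` and `soft_threshold_grad` and the sample values are assumptions made for this example, not code from the paper.

```python
import numpy as np

def soft_threshold(x, tau):
    """Shrink x toward zero by tau; entries with |x| <= tau become 0."""
    return np.sign(x) * np.maximum(np.abs(x) - tau, 0.0)

def soft_threshold_grad(x, tau):
    """Derivative of soft_threshold w.r.t. x: 1 where |x| > tau, else 0."""
    return (np.abs(x) > tau).astype(float)

# Illustrative input values (assumed for this example)
x = np.array([-2.0, -0.5, 0.0, 0.3, 1.5])
y = soft_threshold(x, tau=1.0)       # entries inside [-1, 1] are zeroed
g = soft_threshold_grad(x, tau=1.0)  # gradient is either 0 or 1
print(y)
print(g)
```

Writing the function as `sign(x) * max(|x| - tau, 0)` covers all three branches of the piecewise formula in one expression, which is also convenient when `tau` is an array rather than a scalar.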

When setting the threshold in the soft thresholding function, two conditions must be met. First, the threshold must be positive. Second, the threshold must not exceed the maximum absolute value of the input signal; otherwise the entire output becomes zero.

In addition, the threshold should satisfy a third condition: each sample should have its own independent threshold, determined by that sample's noise content.

The reason is that the amount of noise varies from sample to sample. For example, within one dataset, Sample A may contain little noise while Sample B contains a lot. In that case, when soft thresholding is applied in a denoising algorithm, Sample A should get a smaller threshold and Sample B a larger one. Inside a deep neural network these features and thresholds no longer have an exact physical meaning, but the basic logic stays the same: each sample should have its own independent threshold.
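The effect of per-sample thresholds can be illustrated with a toy example. Everything here is assumed for illustration: the signal, the noise values, and the two hand-picked thresholds (in the network described later, thresholds are learned rather than chosen by hand).

```python
import numpy as np

def soft_threshold(x, tau):
    """Shrink x toward zero by tau; entries with |x| <= tau become 0."""
    return np.sign(x) * np.maximum(np.abs(x) - tau, 0.0)

# The same underlying signal with two different (made-up) noise levels
signal = np.array([0.0, 3.0, 0.0, -3.0])
sample_a = signal + np.array([0.1, -0.1, -0.1, 0.1])  # lightly noisy
sample_b = signal + np.array([0.8, -0.6, -0.9, 0.7])  # heavily noisy
batch = np.stack([sample_a, sample_b])                # shape (2, 4)

# One hypothetical threshold per sample; shape (2, 1) broadcasts over each row
tau = np.array([[0.2], [1.0]])
denoised = soft_threshold(batch, tau)

# A single small threshold leaves noise in the heavily noisy sample
under_denoised_b = soft_threshold(sample_b, 0.2)

# A threshold above the largest absolute value wipes out the whole sample
all_zero_a = soft_threshold(sample_a, 5.0)
```

With the per-sample thresholds, the noise-only positions become zero in both rows while the signal positions survive (shrunk by the respective threshold). Using 0.2 for Sample B leaves residual noise, and using 5.0 for Sample A removes the signal too, which is the failure case of the second condition above.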

3. Attention Mechanism

The attention mechanism is fairly easy to understand in the field of computer vision. An animal's visual system rapidly scans the whole scene and finds the target object. It then focuses its attention on the target object alone, so that more details can be extracted and irrelevant information can be ignored. For more background, see the literature on attention mechanisms.

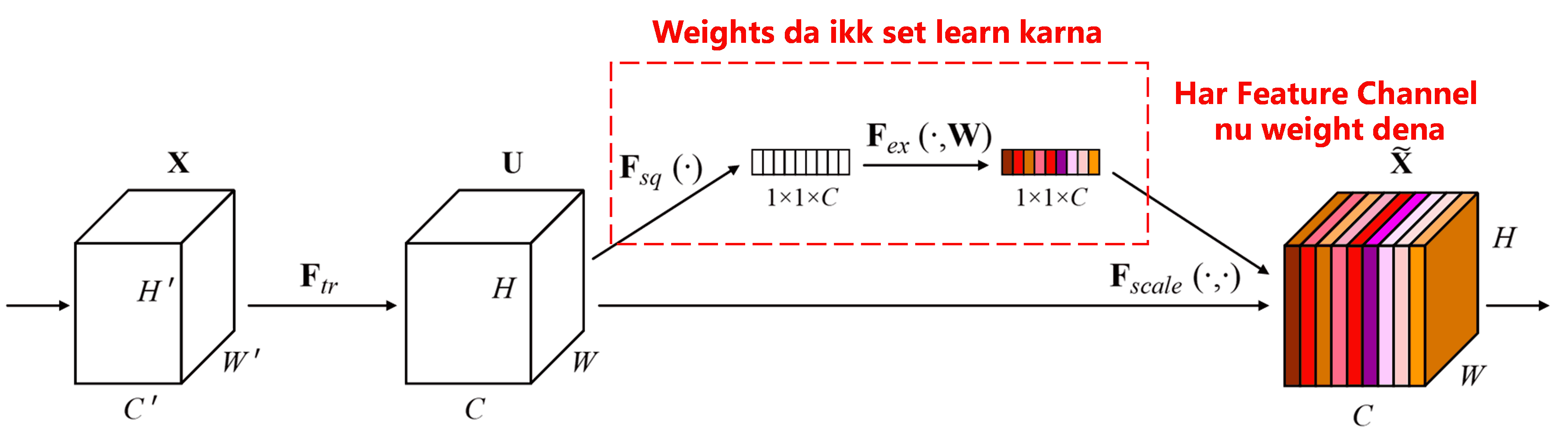

Squeeze-and-Excitation Network (SENet) is a relatively new attention-based method in deep learning. In a classification task, the contribution of each feature channel can differ from sample to sample. SENet uses a small sub-network to obtain a set of weights, and then multiplies these weights with the features of the corresponding channels to adjust the magnitude of the features in each channel. This process can be viewed as applying different levels of attention to different feature channels.

In this way, each sample has its own independent set of weights. In other words, the weights for any two samples can differ. In SENet, the path for obtaining the weights is “Global Pooling → Fully Connected Layer → ReLU Function → Fully Connected Layer → Sigmoid Function”.
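The weight path quoted above can be sketched as a NumPy forward pass. This is a minimal sketch under several assumptions: a `(batch, channels, height, width)` layout, global average pooling, randomly initialised parameters (`w1`, `b1`, `w2`, `b2`), and a hypothetical function name `se_channel_weights`. It is not the reference SENet implementation.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def se_channel_weights(x, w1, b1, w2, b2):
    """x: (batch, channels, height, width) -> weights of shape (batch, channels)."""
    pooled = x.mean(axis=(2, 3))                # global average pooling
    hidden = np.maximum(pooled @ w1 + b1, 0.0)  # fully connected layer + ReLU
    return sigmoid(hidden @ w2 + b2)            # fully connected layer + sigmoid

# Random stand-ins for a feature map and for learned parameters (assumptions)
rng = np.random.default_rng(0)
batch, channels, height, width, reduced = 2, 8, 4, 4, 4
x = rng.normal(size=(batch, channels, height, width))
w1 = rng.normal(size=(channels, reduced)) * 0.1
b1 = np.zeros(reduced)
w2 = rng.normal(size=(reduced, channels)) * 0.1
b2 = np.zeros(channels)

weights = se_channel_weights(x, w1, b1, w2, b2)  # one weight per sample and channel
scaled = x * weights[:, :, None, None]           # rescale every channel
```

Because the weights are computed from each sample's own pooled features, every row of `weights` belongs to exactly one sample, which is what gives each sample its own set of channel weights.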

4. Soft Thresholding under a Deep Attention Mechanism

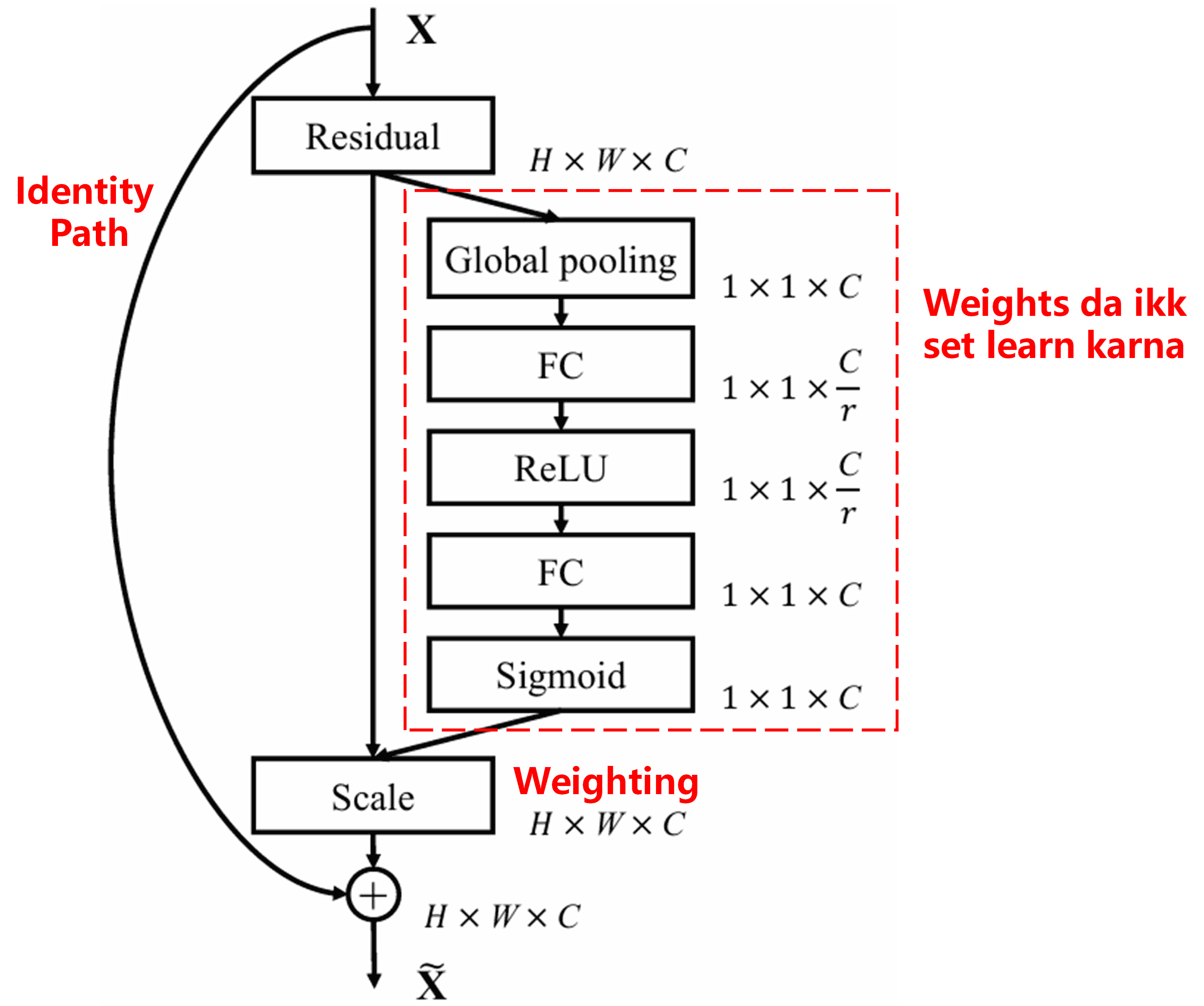

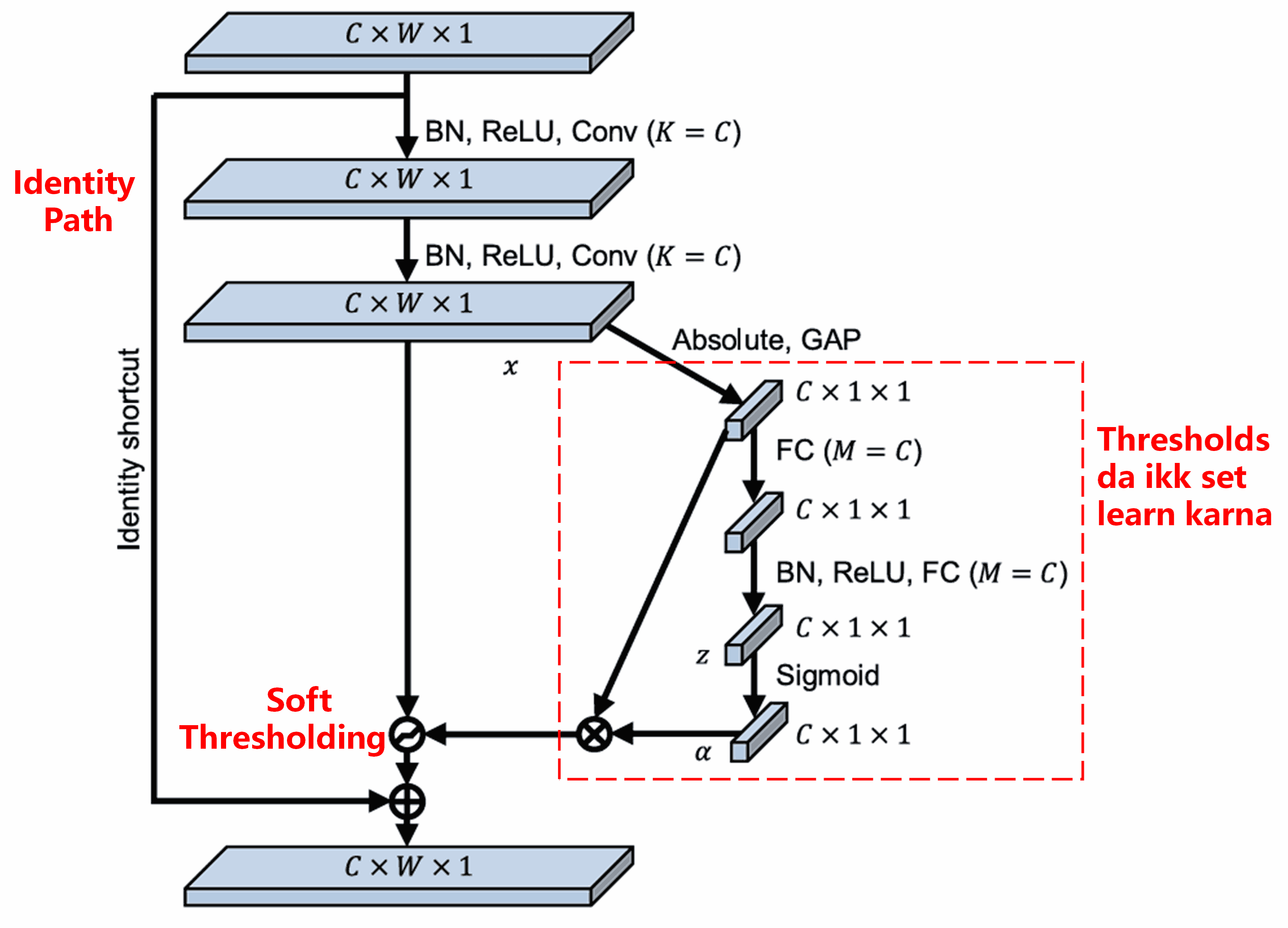

The Deep Residual Shrinkage Network borrows the sub-network structure of SENet in order to perform soft thresholding under a deep attention mechanism. Through the sub-network marked by the red box, a set of thresholds can be learned, and soft thresholding is then applied to each feature channel with these thresholds.

In this sub-network, the absolute values of all features in the input feature map are computed first. Global average pooling then averages them, yielding one feature, denoted A. On the other path, the feature map after global average pooling is fed into a small fully connected network. The last layer of this network is a sigmoid function, which normalizes the output to between 0 and 1 and yields a coefficient, denoted α. The final threshold can be written as α×A. That is, the threshold is a number between 0 and 1 multiplied by the average of the absolute values of the feature map. This ensures that the threshold is positive and also not too large.
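The threshold computation described above (threshold = α×A, followed by soft thresholding) can be sketched in NumPy as follows. This is a simplified sketch, not the authors' implementation: the `(batch, channels, height, width)` layout, the two-layer fully connected network without normalization, the random parameters, and the name `channel_wise_shrinkage` are all assumptions made for illustration.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def channel_wise_shrinkage(x, w1, b1, w2, b2):
    """x: (batch, channels, height, width). Returns (shrunk features, thresholds)."""
    abs_mean = np.abs(x).mean(axis=(2, 3))        # A: average of |x| per channel
    hidden = np.maximum(abs_mean @ w1 + b1, 0.0)  # small fully connected network
    alpha = sigmoid(hidden @ w2 + b2)             # alpha lies in (0, 1)
    tau = (alpha * abs_mean)[:, :, None, None]    # threshold = alpha * A
    shrunk = np.sign(x) * np.maximum(np.abs(x) - tau, 0.0)  # soft thresholding
    return shrunk, tau

# Random stand-ins for a feature map and for learned parameters (assumptions)
rng = np.random.default_rng(0)
batch, channels, height, width = 2, 8, 4, 4
x = rng.normal(size=(batch, channels, height, width))
w1 = rng.normal(size=(channels, channels)) * 0.1
b1 = np.zeros(channels)
w2 = rng.normal(size=(channels, channels)) * 0.1
b2 = np.zeros(channels)

shrunk, tau = channel_wise_shrinkage(x, w1, b1, w2, b2)
```

Since α is strictly between 0 and 1 and A is an average of absolute values, each threshold is positive and smaller than the largest absolute value in its channel, so at least one feature in every channel survives. The thresholds also differ per sample and per channel because both α and A are computed from the sample itself.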

Moreover, different samples end up with different thresholds. This can therefore be regarded as a special kind of attention mechanism: it notices the features that are irrelevant to the current task, brings them close to zero through two convolutional layers, and then sets them exactly to zero through soft thresholding. Put the other way around, it notices the relevant features and preserves them.

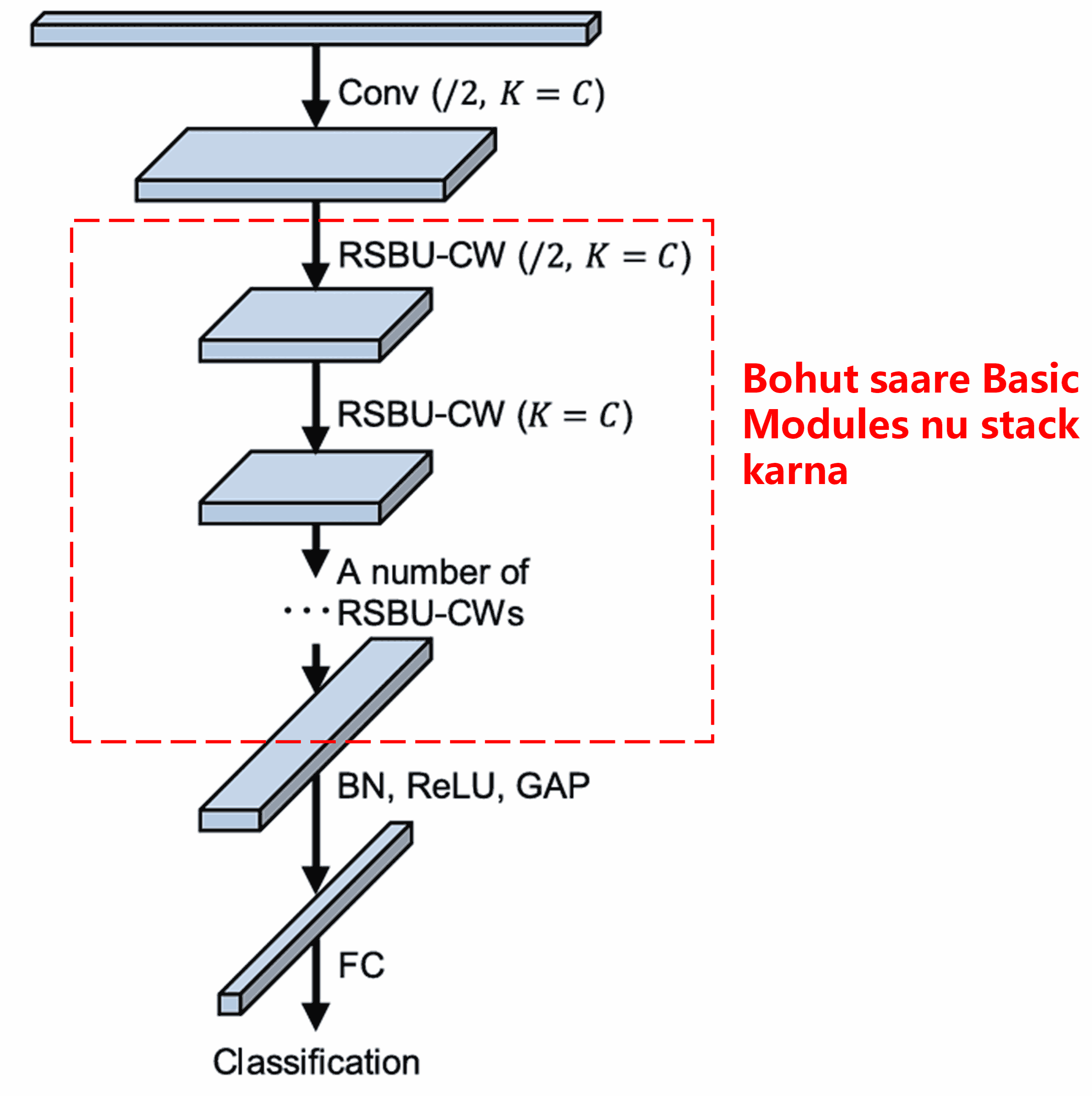

Finally, the complete Deep Residual Shrinkage Network is built by stacking a number of basic modules together with convolutional layers, batch normalization, activation functions, global average pooling, and a fully connected output layer.

5. Generalization Capability

The Deep Residual Shrinkage Network is, in fact, a general feature learning method. In most feature learning tasks, the samples contain at least some noise or irrelevant information, and this noise can affect feature learning performance. For example:

In image classification, if an image contains many other objects besides the target object, those objects can be regarded as “noise”. With the help of the attention mechanism, the Deep Residual Shrinkage Network may be able to notice these “noise” objects and set their features to zero through soft thresholding, which can improve image classification accuracy.

In speech recognition, if the environment is noisy, for example when talking at the roadside or in a factory workshop, the Deep Residual Shrinkage Network may improve speech recognition accuracy, or at least it offers an approach that can improve it.

Reference

Minghang Zhao, Shisheng Zhong, Xuyun Fu, Baoping Tang, Michael Pecht, Deep residual shrinkage networks for fault diagnosis, IEEE Transactions on Industrial Informatics, 2020, 16(7): 4681-4690.

https://ieeexplore.ieee.org/document/8850096

BibTeX

@article{Zhao2020,
  author = {Minghang Zhao and Shisheng Zhong and Xuyun Fu and Baoping Tang and Michael Pecht},
  title = {Deep Residual Shrinkage Networks for Fault Diagnosis},
  journal = {IEEE Transactions on Industrial Informatics},
  year = {2020},
  volume = {16},
  number = {7},
  pages = {4681-4690},
  doi = {10.1109/TII.2019.2943898}
}

Impact

This paper has been cited more than 1400 times on Google Scholar.

By one estimate, Deep Residual Shrinkage Networks (DRSN) have been used or improved upon in more than 1000 research papers. These papers span many fields, including mechanical engineering, electric power, computer vision, medicine, speech, text, radar, and remote sensing.